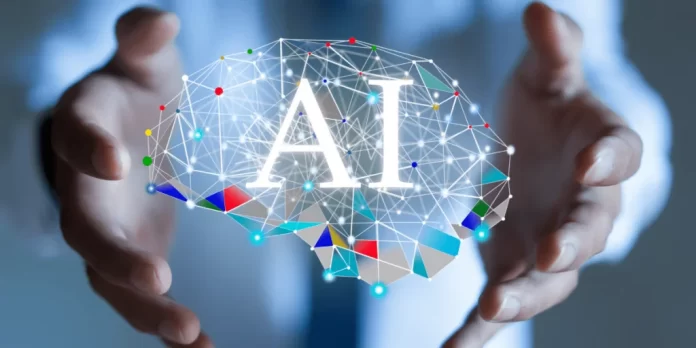

Artificial intelligence (AI) has recently worked its way into our daily lives in ways we might not even notice. It has spread so widely that many people remain unaware of its effects and of how much we depend on it.

From morning to night, our daily activities are largely driven by AI technology. Many of us pick up a laptop or cell phone as soon as we wake up to begin the day, and doing so has become an automatic part of how we make decisions, plan, and look for information.

When we turn on our gadgets, we immediately connect to AI features like:

- Face ID, image recognition, email, and social media

- Google search and digital voice assistants such as Apple’s Siri and Amazon’s Alexa

- Online banking

- Driving aids: route planning, traffic updates, and weather reports

- Shopping, and entertainment during downtime, such as Netflix and Amazon for movies and television shows

Today, AI permeates every part of our online personal and professional lives. Global communication and networking is, and will continue to be, a crucial aspect of business. Making use of data science and artificial intelligence is essential, and their potential for growth appears to have no clear limit.

Even though AI is now nearly taken for granted, what precisely is it and how did it come about?

Artificial intelligence: What is it?

Unlike the natural intelligence exhibited by both humans and animals, AI is the intelligence expressed by machines.

The brain, the most intricate organ in the human body, regulates all bodily processes and interprets information from the environment. Its networks are made up of about 86 billion neurons, connected by an estimated 100 trillion synapses. Neuroscientists are still working to understand and unravel many of its implications and capabilities.

The fundamental principles of AI mirror the way humans continually adapt and learn. Advances in machine intelligence will, in turn, be driven by human intelligence, creativity, knowledge, experience, and innovation.

When did artificial intelligence become a thing?

The work Alan Turing did at Bletchley Park to decipher German signals during the Second World War was a crucial turning point in science. His ground-breaking work helped establish some of the fundamentals of computer science.

In the 1950s, Turing asked whether machines could think for themselves. This unconventional idea, along with the expanding applications of machine learning in problem solving, helped pave the way for numerous advances in the discipline. Research investigated the fundamental question of whether machines could be programmed to think, comprehend, learn, and apply their own ‘intelligence’ to solving problems just as people do.

In the 1950s, computer and cognitive scientists such as Marvin Minsky and John McCarthy recognized this potential. Their work, which built on Turing’s, helped drive rapid growth in the field. Participants in a workshop held in 1956 at Dartmouth College in the USA, one of the most esteemed academic research universities in the world, laid the groundwork for what is today known as the field of artificial intelligence. Many of those in attendance later rose to prominence as leaders and innovators in the field.

As a testament to his ground-breaking work, the Turing Test is still used in AI research today as a measure of how successful AI initiatives and developments are.

How does artificial intelligence work?

AI starts with acquiring a very large amount of data. That data is then mined for information, patterns, and insights. The goal is to build on each of these building blocks and then apply the results to new and unfamiliar situations.
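The three steps described above (gather data, find a pattern, apply it to a new case) can be illustrated with a very small sketch. This is only a toy example, not how production AI systems are built: the data points are made up, the names `fit_line` and `predict` are hypothetical, and the "pattern" is just a straight line fitted by ordinary least squares.

```python
# Toy illustration: learn a pattern from data, then apply it to an unseen input.
# All numbers below are invented for the example.

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Step 1: acquire data (hypothetical: hours of phone use vs. battery drained, in %).
hours = [1, 2, 3, 4, 5]
drained = [10, 20, 30, 40, 50]

# Step 2: discover the pattern hidden in the data.
model = fit_line(hours, drained)

# Step 3: apply the pattern to a situation that was not in the data.
estimate = predict(model, 8)
print(estimate)
```

Real systems use far larger datasets and far richer models, but the shape of the process is the same: the program is never told the rule directly, it estimates the rule from examples.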

Such technology depends on sophisticated machine learning algorithms, advanced programming, datasets, databases, and computer architecture. Computational thinking, software engineering, and a focus on problem solving also contribute to the successful completion of particular tasks.

Artificial intelligence (AI) can take many different forms, from straightforward tools like chatbots used in customer service applications to sophisticated machine learning systems used by enormous corporations. The field encompasses a wide range of technologies, including:

Machine learning (ML). ML describes computer systems that are able to learn and adapt without following explicit instructions, using algorithms and statistical models to analyze patterns in data and draw conclusions from them. ML is usually divided into three basic categories: supervised, unsupervised, and reinforcement learning.

Narrow AI. Modern computer systems depend on this, particularly those that have been taught, or have learned, to perform particular tasks without being explicitly programmed to do so. Examples of narrow AI include virtual assistants on mobile devices, such as those on the Apple iPhone and Google Assistant on Android, as well as recommendation engines that make suggestions based on search or purchasing history.

Artificial general intelligence (AGI). The boundaries between science fiction and reality can seem hazy at times. AGI, as imagined in the machines of shows and films like Westworld, The Matrix, and Star Trek, has come to mean the capacity of intelligent machines to comprehend and learn any task or procedure typically carried out by a human being.

Strong AI. This term is frequently used interchangeably with AGI. However, some academics and researchers in the field of artificial intelligence feel that it should only be used once machines achieve sentience or consciousness.

Natural language processing (NLP). This is a difficult area of AI within computer science because it requires a massive amount of data. Expert systems and data interpretation are needed to teach intelligent machines to comprehend how people write and speak. NLP applications are increasingly used in, for instance, call centers and the healthcare industry.

DeepMind. As major technology companies compete for the machine learning market, they are creating cloud services to target industries like leisure and recreation. AlphaGo, a computer program developed by Google’s DeepMind to play the board game Go, is one example. Another is Watson, a supercomputer developed by IBM, best known for competing in a live, televised Jeopardy! challenge.
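To make the supervised and unsupervised categories of machine learning described above more concrete, here is a minimal sketch. It is an illustration under simplifying assumptions, not a reference implementation: the numbers and labels are invented, the function names `nearest_neighbor` and `two_means` are hypothetical, and the techniques chosen (a 1-nearest-neighbor classifier and a one-dimensional k-means with two clusters) are simply two of the smallest examples of each category. Reinforcement learning is not shown.

```python
# Supervised learning: every training example comes with a label,
# and the program predicts a label for a new, unlabelled value.
def nearest_neighbor(train, x):
    """Return the label of the training example closest to x."""
    _, closest_label = min(train, key=lambda pair: abs(pair[0] - x))
    return closest_label


# Hypothetical data: trip length in km, labelled by a person.
labelled = [(1.0, "short"), (1.5, "short"), (8.0, "long"), (9.0, "long")]
predicted = nearest_neighbor(labelled, 7.2)
print(predicted)


# Unsupervised learning: no labels are given, so the program
# has to find the groups in the data by itself.
def two_means(values, iterations=10):
    """Split values into two groups (1-D k-means with k=2).

    Assumes the values are not all identical.
    """
    low, high = min(values), max(values)  # initial guesses for the two centers
    for _ in range(iterations):
        # Assign each value to the nearer center.
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        # Move each center to the mean of its group.
        low = sum(group_low) / len(group_low)
        high = sum(group_high) / len(group_high)
    return group_low, group_high


unlabelled = [1.0, 1.5, 8.0, 9.0, 1.2, 8.5]
groups = two_means(unlabelled)
print(groups)
```

The difference is in what the program is given: the supervised example relies on labels supplied by a person, while the unsupervised example receives only raw numbers and separates them into two groups without being told what the groups mean.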

Careers in artificial intelligence

The use of AI, data science, and automation is set to grow. Forecasts for the data analytics market expect the big data sector to grow rapidly through 2023: in The Global Big Data Analytics Forecast to 2023, Frost & Sullivan project growth of 29.7%, worth a striking $40.6 billion.

As a result, there is a great deal of untapped potential, and professional opportunities are expanding. Many top employers are looking for professionals with the skills to advance their organization’s goals. Possible career paths include:

- Robotics and autonomous vehicles (such as those produced by Waymo, Nissan, and Renault)

- Healthcare (for example, applications in genetic sequencing research, the treatment of tumors, and the development of tools to speed up the diagnosis of conditions like Alzheimer’s disease)

- Academia (MIT, Stanford, Harvard, and Cambridge are top universities for AI research)

- Retail (including Amazon Go stores and other cutting-edge shopping options)

- Banking and finance

What is clear is that, as with every technological advancement, new professions and careers will be created to replace those that are lost.